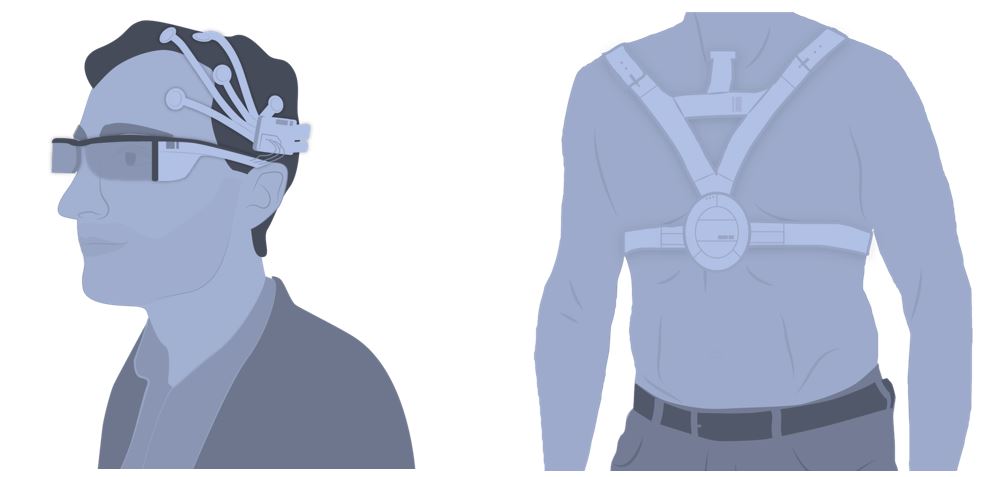

Figure 1: Examples of envisioned sensory amplification systems: amplified visual perception that lets users control zoom, perspective, spectrum, and speed using gaze-based attention, EMG, and EEG (left), and a haptic stimulation device that maps information onto tactile and electrical patterns to create a sensory experience and artificial reflexes (right).

Scenario 1: Amplified Visual Perception

Human visual perception strongly defines how we experience our physical and social environments. What a person sees is limited by the individual’s physiological abilities (e.g., temporal resolution, spectrum, and acuity). What is seen and perceived can be controlled through muscle movement (e.g., head or eye movement) and through psychological factors (e.g., priming). Our vision is to create an amplified visual perception system that overcomes classical limitations of human vision and can be intuitively controlled.

The prototype device will consist of multiple cameras that provide a wide spectrum of visual information (e.g., visible light and thermal vision) and a range of perspectives (e.g., a view to the back and a third-person perspective). The device will allow users to zoom into regions, and will offer a variable capture speed and freezing of an image for close observation. To control the system, eye gaze, muscle activity (EMG), and brain signals (EEG) captured through a brain-computer interface (BCI) will be used, allowing implicit and explicit intuitive control. The output device takes current augmented reality systems as the starting point (see Figure 1).

Scenario 2: A New Sense for Rain and One for Lack of Exercise

Many information sources, such as traffic data, weather forecasts, number of steps walked, and the activity and location of friends, are available and can be easily accessed through mobile apps and websites. The proposed research, however, will assess the feasibility of creating a new sense that conveys such information to the human subconsciously, building on the concept of sensory substitution. Such an experimental system makes it possible to test the hypothesis that new technical senses can be developed.

In the project, we will create a prototype for a platform that can enable new senses. One sense to be implemented will use current weather forecast data together with personal activity and movement data, and will provide stimuli to the body representing this information. This prototype will be designed to be worn over the long term (e.g., several weeks to months). It is expected that over time the human user will acquire a new sense that does not require explicit cognitive processing of the information. See Figure 1 for a sketch.
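To make the idea of representing forecast data as a bodily stimulus concrete, the following is a minimal sketch of one possible encoding. The text does not specify how data will be mapped to stimuli; the linear mapping, the function name `rain_stimulus_intensity`, and the normalized intensity scale are all assumptions made for illustration.

```python
def rain_stimulus_intensity(rain_probability: float, max_intensity: float = 1.0) -> float:
    """Map a forecast rain probability to a stimulus intensity.

    Hypothetical encoding: the probability is clamped to [0, 1] and scaled
    linearly, so a higher chance of rain yields a stronger stimulus.
    """
    clamped = min(max(rain_probability, 0.0), 1.0)
    return clamped * max_intensity


if __name__ == "__main__":
    # Example forecast values; these are made up for illustration.
    for probability in (0.0, 0.5, 1.0):
        print(probability, rain_stimulus_intensity(probability))
```

A real system would likely need a perceptually calibrated mapping rather than a linear one, since perceived intensity of tactile or electrical stimulation does not generally scale linearly with the drive signal.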

There will be no control interface for the rain sense, as it is expected to be active all the time. For the lack-of-exercise sense, a link back to the monitored exercise will be implemented: once the user becomes aware of the lack of exercise through the actuators and starts moving or doing an activity, the actuation stops. We expect this control loop to help us understand whether it is feasible to create senses that imply actions, which likewise require little or no cognitive processing, conceptually similar to a reflex.
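The feedback loop for the lack-of-exercise sense can be sketched as a small state machine. This is an illustrative sketch only: the class name `ExerciseSense`, the inactivity limit, the movement threshold, and the normalized activity level are hypothetical and do not come from the project description.

```python
from dataclasses import dataclass


@dataclass
class ExerciseSense:
    """Hypothetical controller that actuates while the user has been inactive too long."""

    inactivity_limit_s: float = 3600.0  # assumed threshold before actuation starts
    movement_threshold: float = 0.2     # assumed activity level that counts as moving
    inactive_for_s: float = 0.0
    actuating: bool = False

    def update(self, activity_level: float, dt_s: float) -> bool:
        """Advance the loop by dt_s seconds and return whether the actuators are on."""
        if activity_level >= self.movement_threshold:
            # Movement detected: reset the inactivity timer and stop actuation.
            self.inactive_for_s = 0.0
            self.actuating = False
        else:
            # No movement: accumulate inactive time and actuate past the limit.
            self.inactive_for_s += dt_s
            if self.inactive_for_s >= self.inactivity_limit_s:
                self.actuating = True
        return self.actuating


if __name__ == "__main__":
    sense = ExerciseSense(inactivity_limit_s=10.0)
    # Made-up activity samples, one every 5 seconds.
    for level in (0.0, 0.0, 0.5, 0.0):
        print(level, sense.update(level, dt_s=5.0))
```

The key property is that the stimulus is removed by the user's own movement rather than by an explicit control action, which is what makes the loop resemble a reflex.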